GSOC Week 7: Benchmarking

I started this week where I ended last week. I finished the benchmarking series where I test the database suitability tool with a human mzML and databases from multiple other organisms.

As I already described in my last blog post the tested databases where from multiple primates, some other non-related species and one shuffled human database.

After the first run finished I was a little worried because no database scored under 70 % suitability. This means something is not working properly. Luckily it didn't take me much time to figure out what it was.

I forgot to filter for FDR. This will obviously result in a wrong output since all hits are counted regardless of their q-value. So, once again I needed to tweak the tool. I added a user input for a FDR, checked for this FDR before counting hits as either 'db' or 'novo' and changed the test and documentation. I then created a PR for those changes. This PR was merged without much discussion.

I than ran the benchmarking series again and, who would have thought, now the results look much more promising. They range from 84.7 % for a chimp database to 52.5 % for a crow database and 3.5 % for the shuffled human database. These results do not match the paper results exactly, but the show the same trend.

To reproduce the paper results I than started to write a python script which simulates the workflow described in the paper. Because the last time I wrote python code, this took me quite some time and I didn't get this done this week.

Currently the script takes an mzML, a novo protein (as FASTA) and the database to search with (as FASTA) as its input, then calculates a decoy database and finally uses comet to search the mzML with the database. The decoy database is build using the OpenMS util 'DecoyDatabase', since the paper doesn't specifiy how this was done by them. What is still missing is an FDR calculation with PeptideProphet and after that the calculation of the suitability.

I was wondering how PeptideProphet was used by the authors for FDR calculations since PeptideProphets scoring is based on probability distributions calculations and not on FDR.

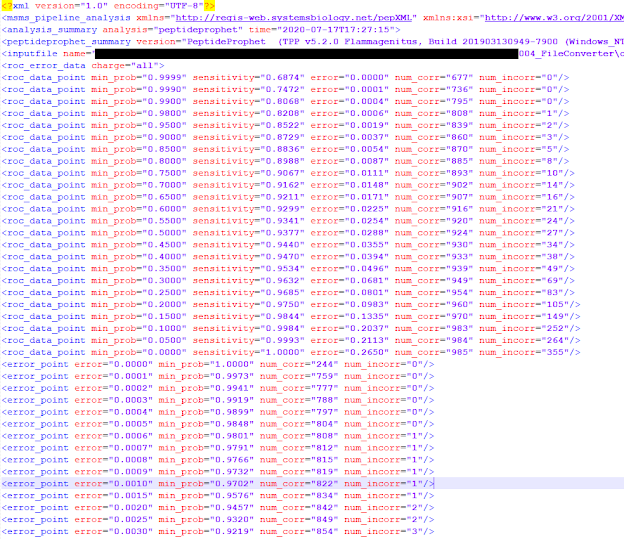

I asked my mentors about this and apparently there is a solution. The header of a .pep.xml produced by PeptideProphet with the option 'ZERO' (meaning it does not filter at all) looks like this:

The 'roc_error_data' table is the important one here. You can see how many 'correct' and 'incorrect' hits are written for a certain probability. Here one can calculate which probability corresponds to which FDR by just calculating the FDR num_incorr / (num_incorr + num_corr). After that we got a probability which corresponds to our FDR and we can just filter for this probability instead.

I don't no if the authors did it like this too. They don't write anything one PeptideProphet configurations.

But anyway, this is how I am going to do it. This will have to wait for next week though. Because the last thing I did, was setting up a github and a repl repository for the benchmarking scripts and some data.

Comments

Post a Comment